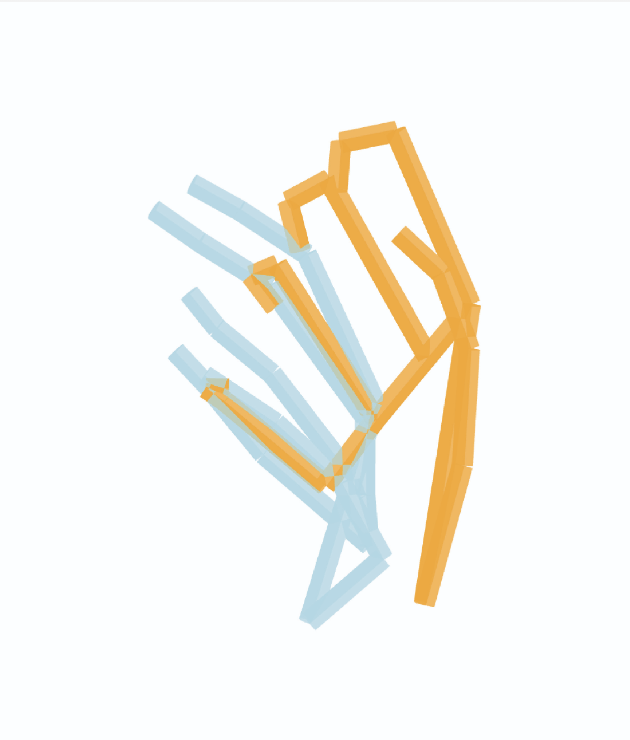

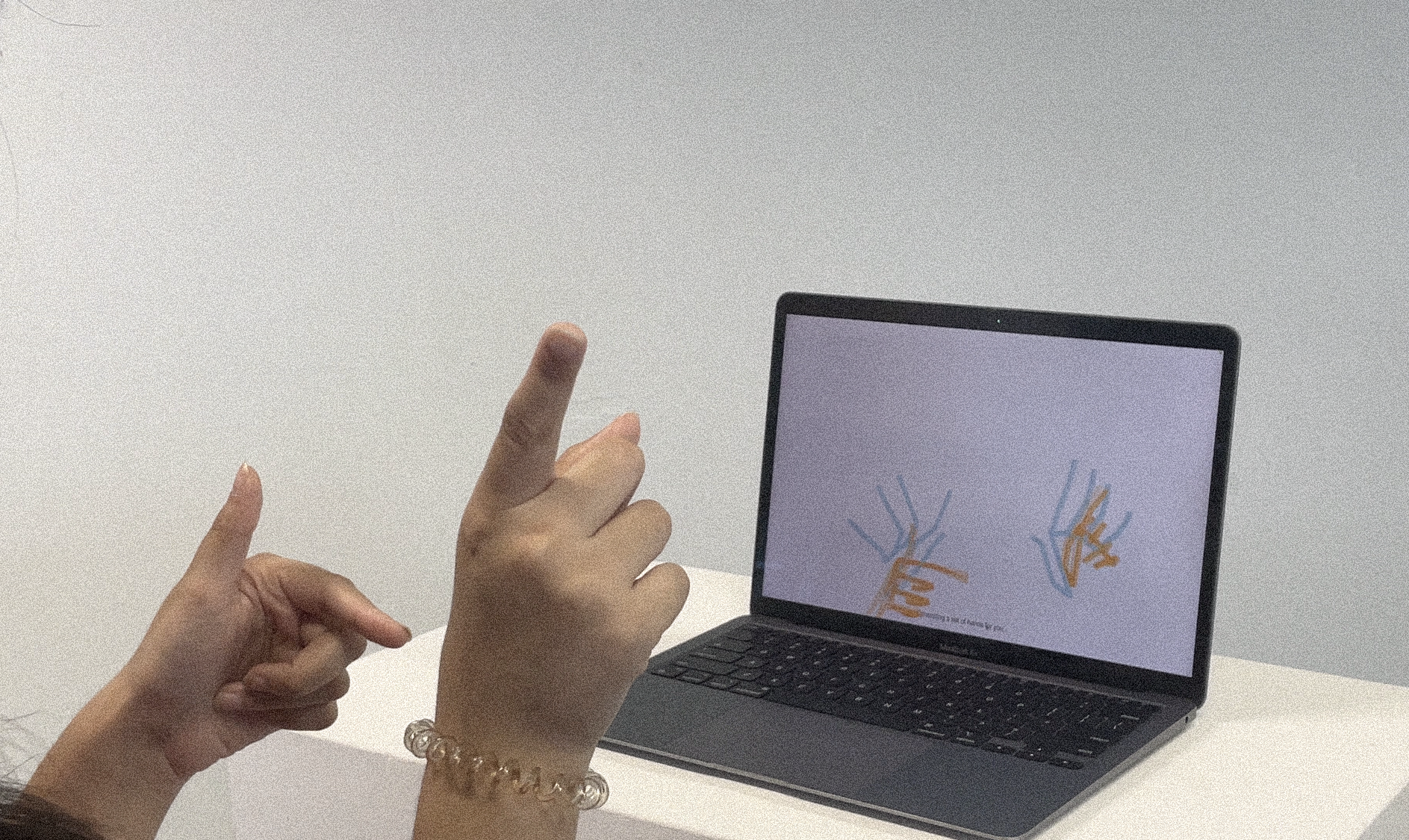

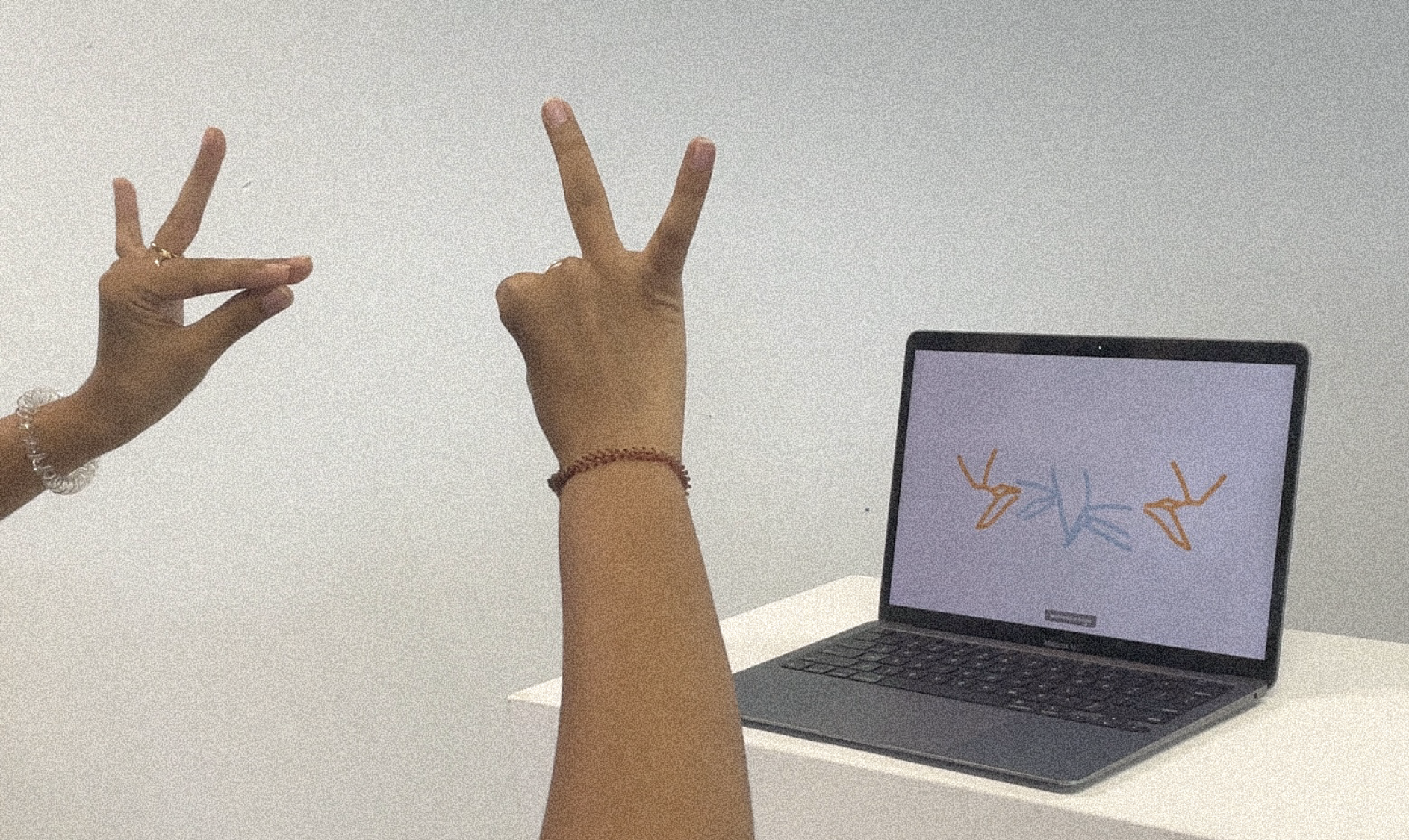

handInHand() explores new forms of human-machine interaction through embodied gestures. Hand motion detection captures the user's gestures and sends them to the Gemini API, which responds by generating virtual hands that react in real time. This interaction creates an evolving, choreographed exchange where human gestures and machine responses harmonize. By transforming gestures into a shared creative language, the project examines intuitive, expressive interactivity and the potential for machines to act as co-creators in creative processes.

Process

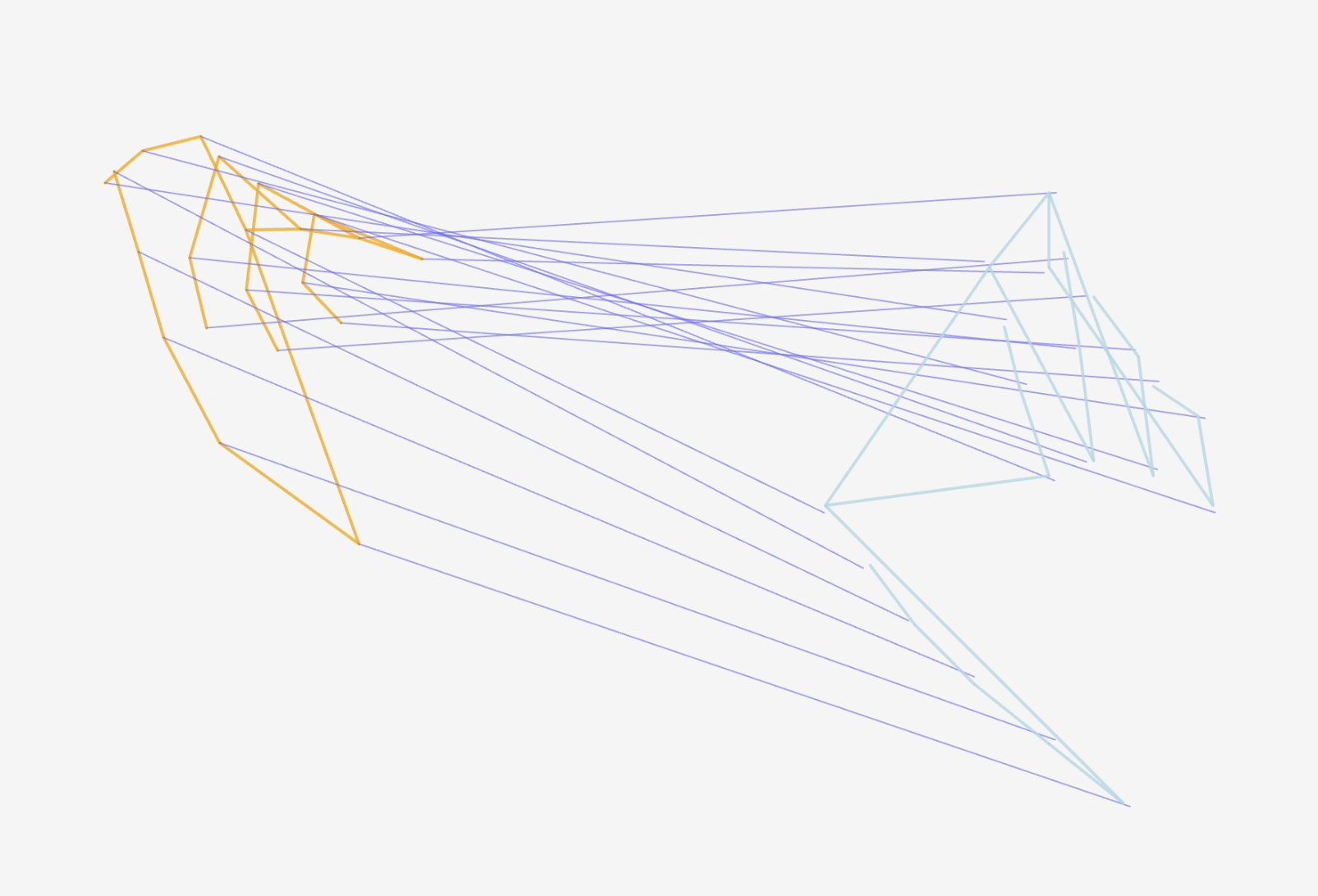

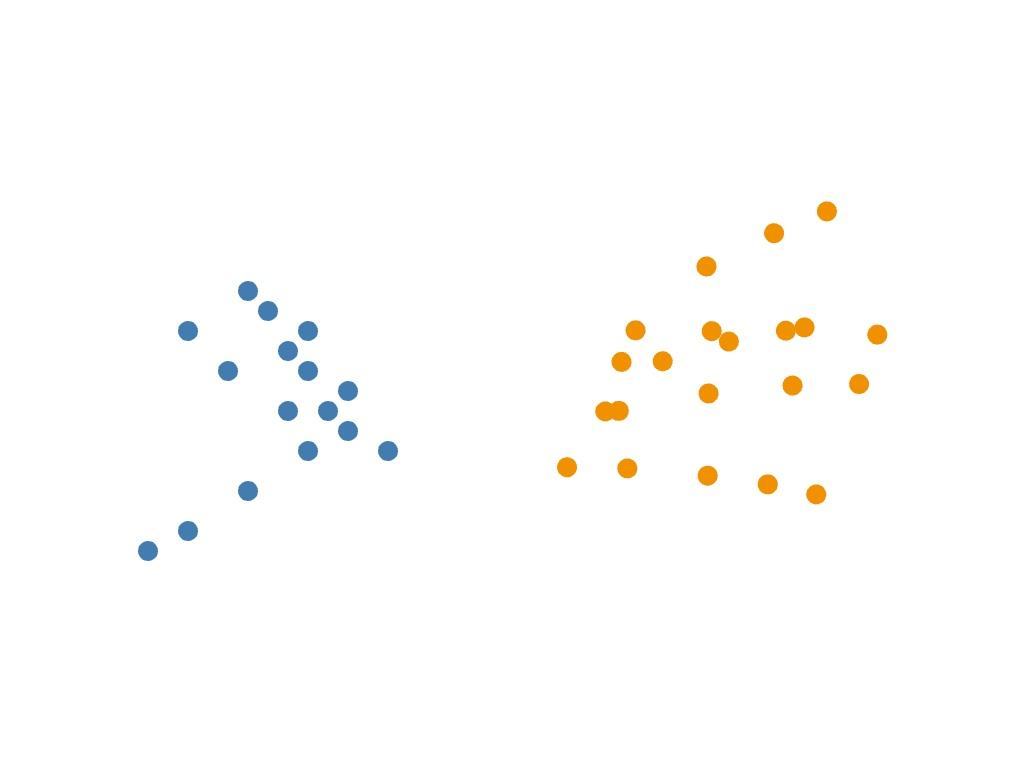

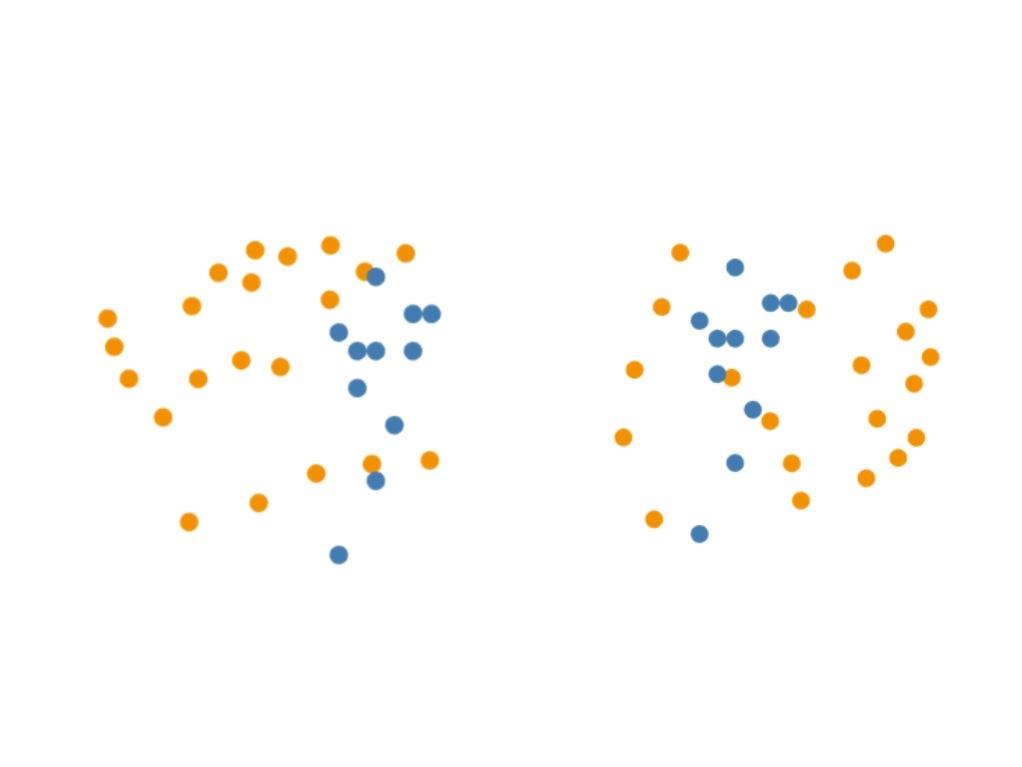

The development process began with integrating the Gemini API to generate 21 keypoints in the shape of a hand, triggered by user input. Experimentation with AI responses led to intriguing outcomes, including gesture-based interactions that appeared to mimic movement or reach toward the user’s hand. Further exploration involved prompting the AI to generate textual reflections based on these interactions, expanding the project’s conceptual scope. Visual refinements, including the implementation of 3D geometry, enhanced the overall experience, making the interactions feel more fluid and immersive.
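To make the keypoint exchange concrete, here is a minimal sketch of how a detected hand could be turned into a prompt and how a text reply could be validated as 21 keypoints. It is an illustration under stated assumptions, not the project's actual code: the function names `build_prompt` and `parse_hand`, the prompt wording, the normalized 0 to 1 coordinate range, and the JSON reply format are all hypothetical, and the call to the Gemini API itself is deliberately left out.

```python
import json

NUM_KEYPOINTS = 21  # the hand is described by 21 keypoints


def build_prompt(user_hand):
    """Serialize the detected hand into a text prompt (wording is hypothetical)."""
    if len(user_hand) != NUM_KEYPOINTS:
        raise ValueError(f"expected {NUM_KEYPOINTS} keypoints, got {len(user_hand)}")
    # Round coordinates to keep the prompt short.
    payload = json.dumps([[round(c, 3) for c in point] for point in user_hand])
    return (
        "Here are 21 hand keypoints as [x, y, z] in the range 0 to 1: "
        f"{payload}. Reply with only a JSON array of 21 [x, y, z] keypoints "
        "for a second hand that reacts to this gesture."
    )


def parse_hand(reply_text):
    """Extract and validate 21 keypoints from a model's text reply.

    Assumes the reply contains a single JSON array, possibly wrapped in
    extra text such as a code fence.
    """
    start = reply_text.find("[")
    end = reply_text.rfind("]")
    if start == -1 or end <= start:
        raise ValueError("no JSON array found in reply")
    points = json.loads(reply_text[start:end + 1])
    if len(points) != NUM_KEYPOINTS:
        raise ValueError(f"expected {NUM_KEYPOINTS} keypoints, got {len(points)}")
    hand = []
    for point in points:
        if len(point) != 3:
            raise ValueError("each keypoint needs x, y, z")
        # Clamp to the assumed normalized range so stray values stay on screen.
        hand.append(tuple(min(1.0, max(0.0, float(c))) for c in point))
    return hand
```

Validating the reply before drawing matters here because a generative model is not guaranteed to return exactly 21 well-formed points; rejecting or clamping malformed output keeps the virtual hand from breaking mid-interaction.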

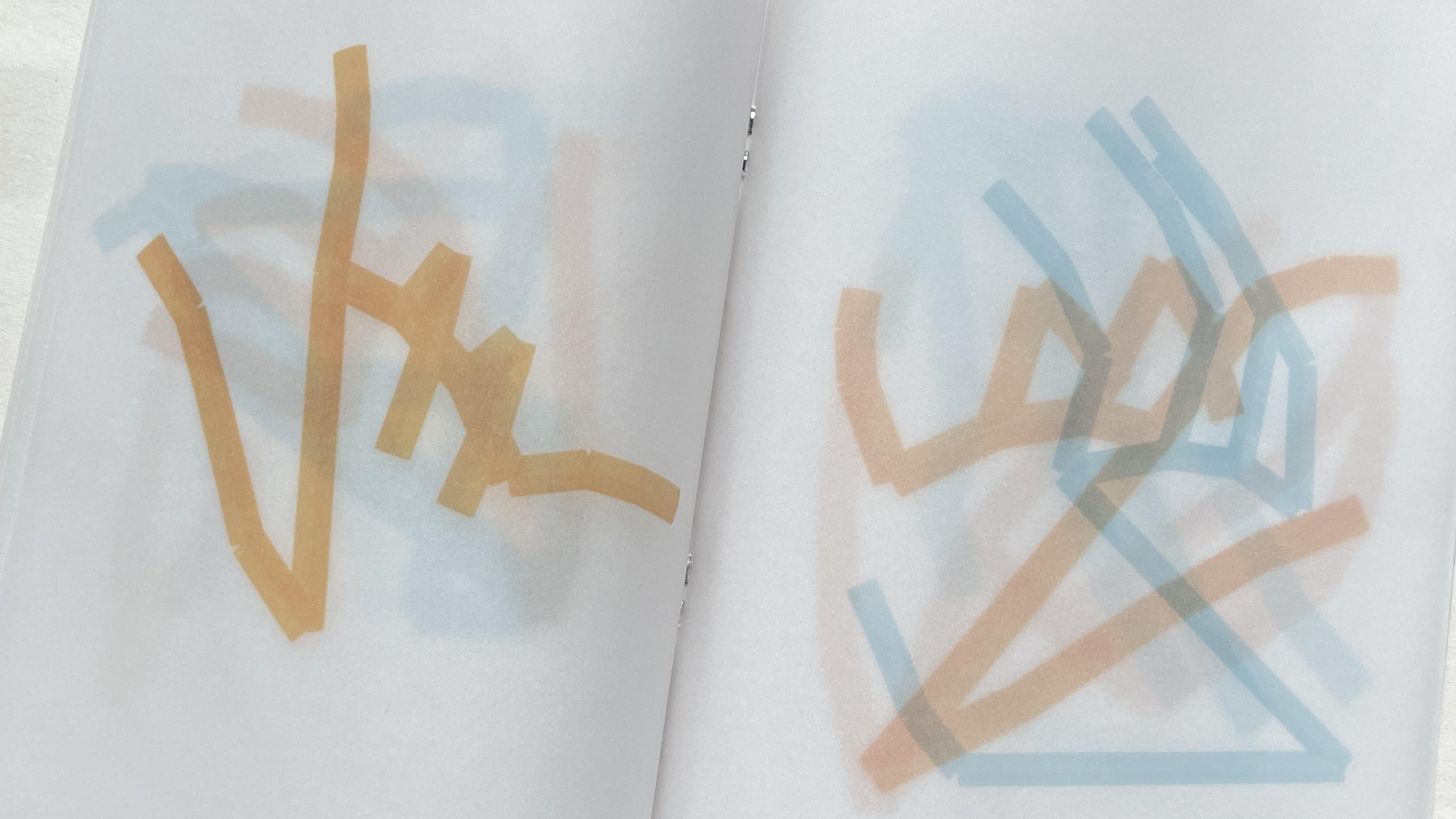

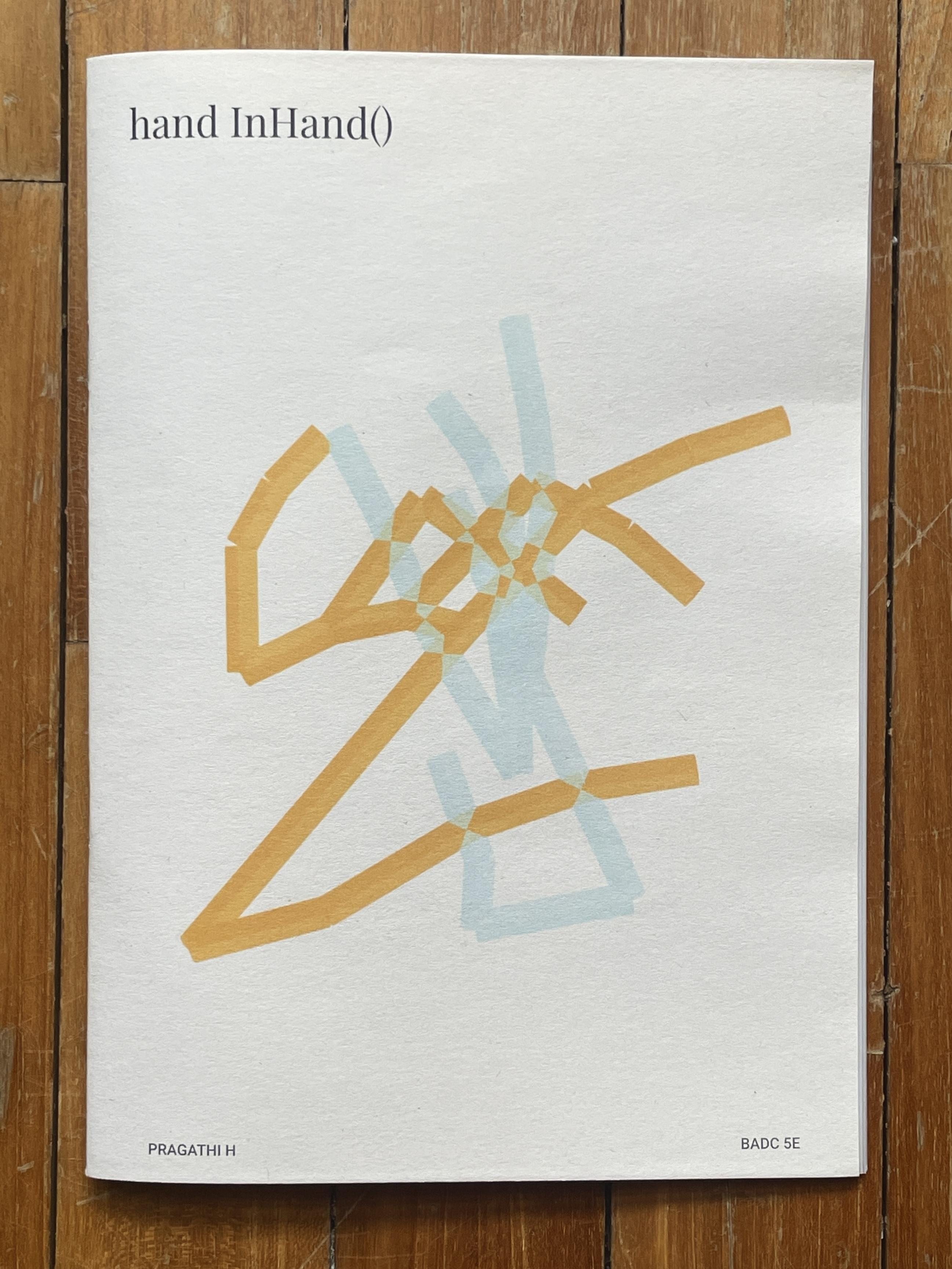

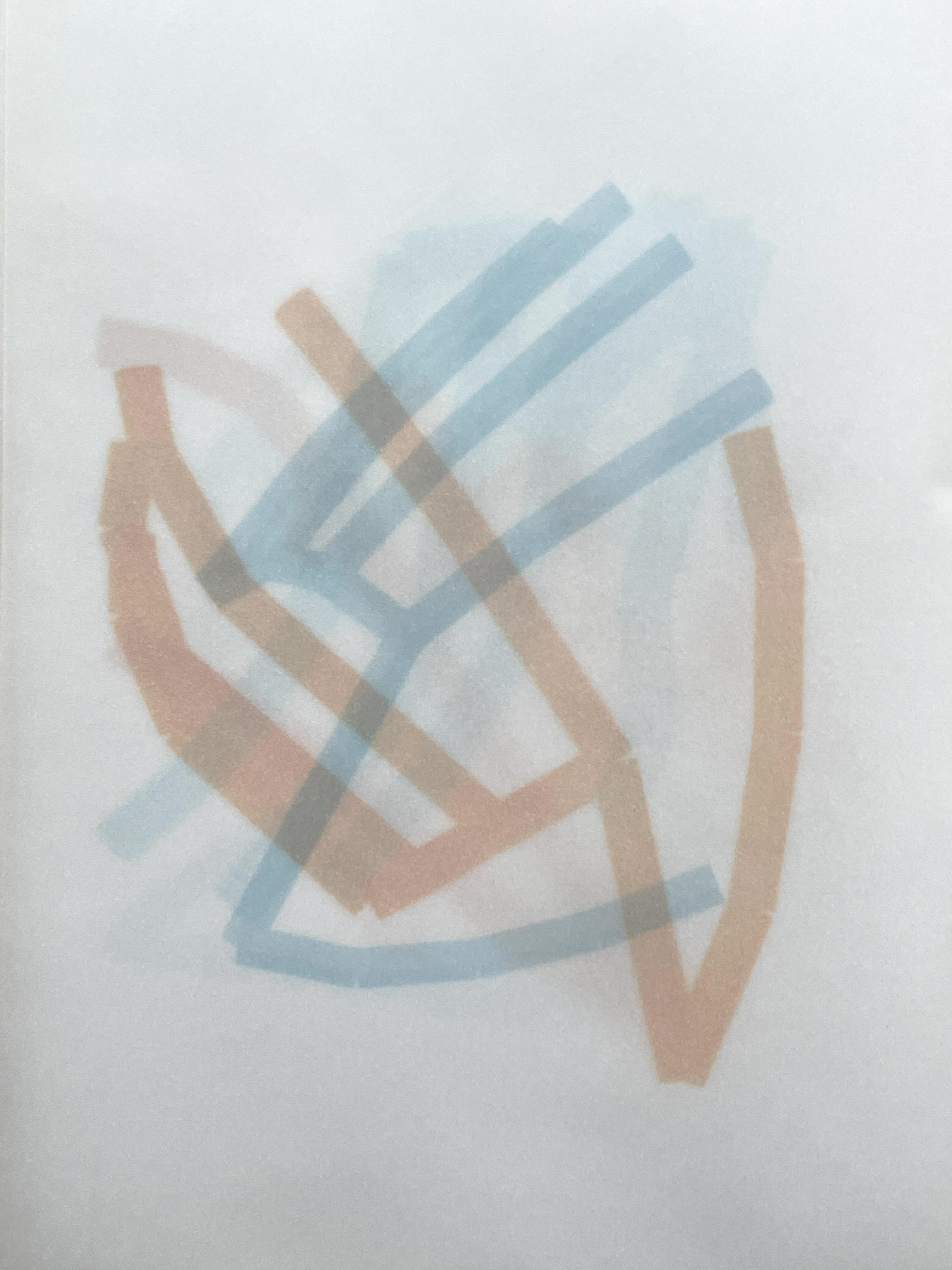

Publication

To expand on the project, a publication was designed using transparent sheets to overlay different AI-generated hand reactions onto various positions of a human hand. This layering technique created a tangible representation of the evolving interaction between human gestures and machine responses, visually capturing the dialogue between movement and artificial intelligence. The publication served both as documentation of the process and as an extension of the project's exploration into the expressive potential of AI-driven gestures.